Hyper-parameter optimization example with random forests

An example of hyper-parameter optimization in which each input is given in dict format.
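To make "dict format" concrete: each candidate is a plain Python dict that maps hyper-parameter names to values, drawn by a sampler function that receives a random number generator. The sketch below assumes the generator behaves like `numpy.random.RandomState` (the `randint` calls in the script suggest this, but it is an assumption). The name `sample_candidate` is illustrative and not part of fluentopt.

```python
import numpy as np


def sample_candidate(rng):
    """Draw one hyper-parameter setting as a dict (illustrative helper)."""
    return {
        'max_depth': rng.randint(1, 100),     # integer in [1, 100)
        'n_estimators': rng.randint(1, 300),  # integer in [1, 300)
    }


# Assumed generator type; seeded so the draws are repeatable.
rng = np.random.RandomState(0)
candidates = [sample_candidate(rng) for _ in range(5)]
for candidate in candidates:
    print(candidate)
```

Because every candidate is a dict, the objective function can read its arguments by name rather than by position.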

Out:

iter 0...

iter 1...

iter 2...

iter 3...

iter 4...

iter 5...

iter 6...

iter 7...

iter 8...

iter 9...

iter 10...

iter 11...

iter 12...

iter 13...

iter 14...

iter 15...

iter 16...

iter 17...

iter 18...

iter 19...

iter 20...

iter 21...

iter 22...

iter 23...

iter 24...

iter 25...

iter 26...

iter 27...

iter 28...

iter 29...

iter 30...

iter 31...

iter 32...

iter 33...

iter 34...

iter 35...

iter 36...

iter 37...

iter 38...

iter 39...

iter 40...

iter 41...

iter 42...

iter 43...

iter 44...

iter 45...

iter 46...

iter 47...

iter 48...

iter 49...

iter 50...

iter 51...

iter 52...

iter 53...

iter 54...

iter 55...

iter 56...

iter 57...

iter 58...

iter 59...

iter 60...

iter 61...

iter 62...

iter 63...

iter 64...

iter 65...

iter 66...

iter 67...

iter 68...

iter 69...

iter 70...

iter 71...

iter 72...

iter 73...

iter 74...

iter 75...

iter 76...

iter 77...

iter 78...

iter 79...

iter 80...

iter 81...

iter 82...

iter 83...

iter 84...

iter 85...

iter 86...

iter 87...

iter 88...

iter 89...

iter 90...

iter 91...

iter 92...

iter 93...

iter 94...

iter 95...

iter 96...

iter 97...

iter 98...

iter 99...

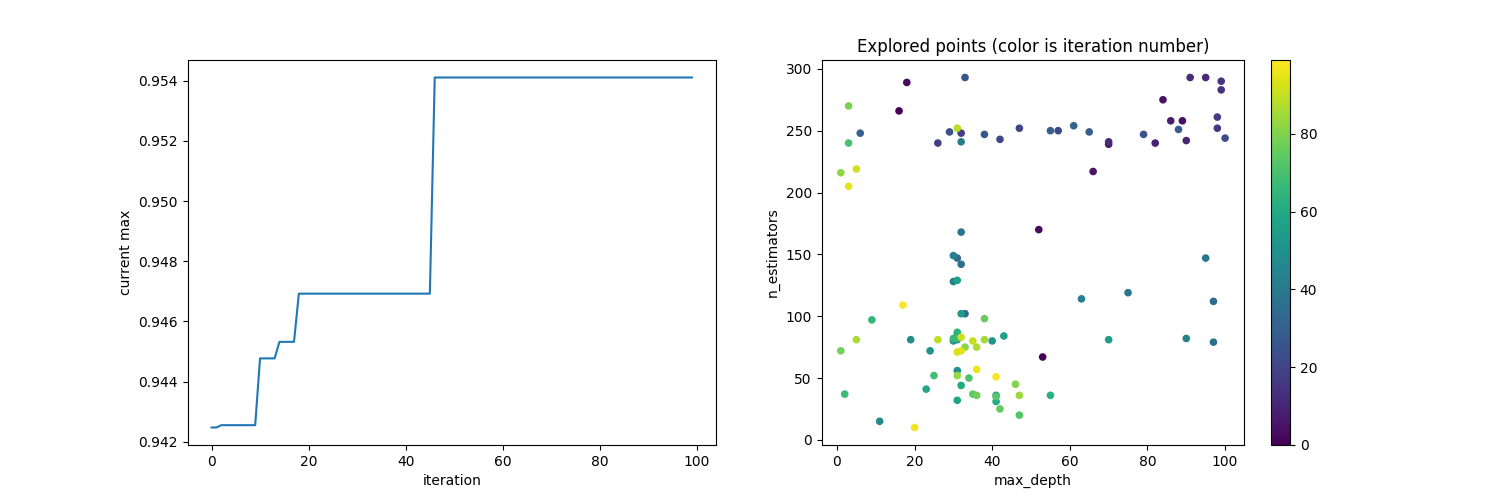
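The left panel of the figure plots the best objective value seen so far at each iteration. A minimal sketch of how such a running maximum can be computed with NumPy follows. The values are made up for illustration and are not results from this run.

```python
import numpy as np

# Hypothetical objective values, one per iteration.
outputs = np.array([0.90, 0.88, 0.93, 0.91, 0.95, 0.94])

# Element i holds the maximum of outputs[0..i], so the curve never decreases.
running_best = np.maximum.accumulate(outputs)
print(running_best)
```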

best input : {'max_depth': 31, 'n_estimators': 81}, best output : 0.95
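The reported best output is not raw accuracy: the script's `feval` returns the mean of the cross-validation scores minus their standard deviation, so settings whose accuracy varies a lot across folds are penalized. The sketch below shows that scoring and the selection of the best history entry. The fold scores and the name `penalized_score` are illustrative assumptions, not values from this run.

```python
import numpy as np


def penalized_score(scores):
    """Mean of the fold scores minus their (population) standard deviation."""
    scores = np.asarray(scores, dtype=float)
    return scores.mean() - scores.std()


# Two hypothetical settings with made-up per-fold accuracies.
history_inputs = [
    {'max_depth': 3, 'n_estimators': 10},
    {'max_depth': 20, 'n_estimators': 50},
]
fold_scores = [
    [0.99, 0.85, 0.99, 0.88, 0.99],  # higher mean, unstable across folds
    [0.93, 0.94, 0.92, 0.93, 0.93],  # slightly lower mean, very stable
]

history_outputs = [penalized_score(s) for s in fold_scores]
best_idx = int(np.argmax(history_outputs))
best_input = history_inputs[best_idx]
print(best_input, round(history_outputs[best_idx], 4))
```

In this toy case the stable setting wins even though its mean accuracy is lower, which is the behaviour the penalty is meant to produce.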

import numpy as np
import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

from fluentopt import Bandit
from fluentopt.bandit import ucb_maximize
from fluentopt.utils import RandomForestRegressorWithUncertainty
from fluentopt.transformers import Wrapper

from sklearn.datasets import load_breast_cancer

np.random.seed(42)

data = load_breast_cancer()
data_X, data_y = data['data'], data['target']


def sampler(rng):
    # Draw one candidate as a dict of hyper-parameters.
    return {'max_depth': rng.randint(1, 100), 'n_estimators': rng.randint(1, 300)}


def feval(d):
    # Objective: mean 5-fold CV accuracy, penalized by its standard deviation.
    max_depth = d['max_depth']
    n_estimators = d['n_estimators']
    clf = RandomForestClassifier(n_jobs=-1, max_depth=max_depth, n_estimators=n_estimators)
    scores = cross_val_score(clf, data_X, data_y, cv=5, scoring='accuracy')
    return np.mean(scores) - np.std(scores)


opt = Bandit(sampler=sampler, score=ucb_maximize,
             model=Wrapper(RandomForestRegressorWithUncertainty()))

n_iter = 100
for i in range(n_iter):
    print('iter {}...'.format(i))
    x = opt.suggest()
    y = feval(x)
    opt.update(x=x, y=y)

# Report the best evaluated point.
idx = np.argmax(opt.output_history_)
best_input = opt.input_history_[idx]
best_output = opt.output_history_[idx]
print('best input : {}, best output : {:.2f}'.format(best_input, best_output))

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 5))

# Left panel: running maximum of the objective over iterations.
iters = np.arange(len(opt.output_history_))
ax1.plot(iters, np.maximum.accumulate(opt.output_history_))
ax1.set_xlabel('iteration')
ax1.set_ylabel('current max')

# Right panel: explored points, colored by iteration number.
X = [[inp['max_depth'], inp['n_estimators']] for inp in opt.input_history_]
X = np.array(X)
sc = ax2.scatter(X[:, 0], X[:, 1], c=iters, cmap='viridis', s=20)
ax2.set_xlabel('max_depth')
ax2.set_ylabel('n_estimators')
ax2.set_title('Explored points (color is iteration number)')
fig.colorbar(sc)

plt.show()

Total running time of the script: (4 minutes 30.655 seconds)
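The optimizer in this script scores candidates with `ucb_maximize` on top of a model that reports uncertainty. How fluentopt computes that score is not shown on this page. The sketch below illustrates only the general upper-confidence-bound idea, assuming the score is the predicted mean plus a multiple of the predicted standard deviation. The function `ucb_pick`, the `kappa` parameter, and all numbers are hypothetical.

```python
import numpy as np


def ucb_pick(mean, std, kappa=1.0):
    """Return the index of the candidate with the highest mean + kappa * std.

    Illustrative helper, not fluentopt's implementation.
    """
    mean = np.asarray(mean, dtype=float)
    std = np.asarray(std, dtype=float)
    return int(np.argmax(mean + kappa * std))


# Made-up model predictions for three candidates.
pred_mean = [0.90, 0.92, 0.85]
pred_std = [0.01, 0.02, 0.10]

exploit = ucb_pick(pred_mean, pred_std, kappa=0.0)  # ignores uncertainty
explore = ucb_pick(pred_mean, pred_std, kappa=1.0)  # rewards uncertainty
print(exploit, explore)
```

With `kappa=0.0` the candidate with the highest predicted mean is chosen. With `kappa=1.0` the uncertain third candidate is chosen instead, which is how a bound of this kind trades exploitation against exploration.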